Cho, Junghoo and Garcia-Molina, Hector (2001) Parallel Crawlers. Technical Report. Stanford.

PDF (123Kb)

Abstract

In this paper we study how to design an effective parallel crawler. As the Web grows, it becomes imperative to parallelize the crawling process in order to finish downloading pages in a reasonable amount of time. We first propose multiple architectures for a parallel crawler and identify fundamental issues related to parallel crawling. Based on this understanding, we then propose metrics to evaluate a parallel crawler and compare the proposed architectures using 40 million pages collected from the Web. Our results clarify the relative merits of each architecture and provide guidelines on when to adopt each one.

| Item Type: | Techreport (Technical Report) |
|---|---|
| Uncontrolled Keywords: | Web crawler, parallelism, distributed crawler |
| Subjects: | Computer Science > Databases and the Web |
| Projects: | Digital Libraries |
| Related URLs: | Project Homepage: http://www-diglib.stanford.edu/diglib/pub/ |
| ID Code: | 505 |
| Deposited By: | Import Account |
| Deposited On: | 02 Oct 2001 17:00 |
| Last Modified: | 27 Dec 2008 09:40 |

Available Versions of this Item

- Parallel Crawlers. (deposited 02 Oct 2001 17:00)

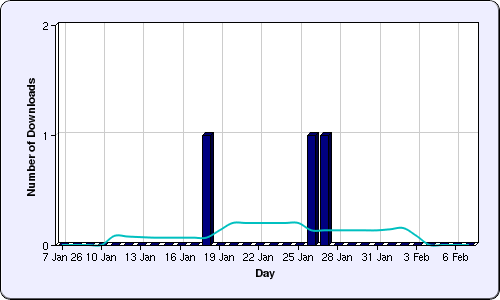

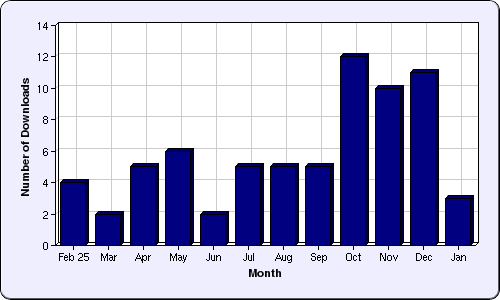

Download statistics

Repository Staff Only: item control page